Grid-aware computing and the net climate impact of AI

Currents: AI & Energy Insights - June 2025

Welcome back to Currents, a monthly column from Reimagine Energy dedicated to the latest news at the intersection of AI & Energy. At the end of each month, I send out an expert-curated summary of the most relevant updates from the sector.

A note before we start: this issue comes out slightly later than usual. I write these posts in my free time, so while I try to keep a consistent schedule, holiday periods can affect my writing frequency. I’m sure you’ll still find the insights interesting, and I have a few more articles in store that I think you’ll enjoy. Now let’s get to it!

1. Industry news

Data centres: grid liabilities and assets

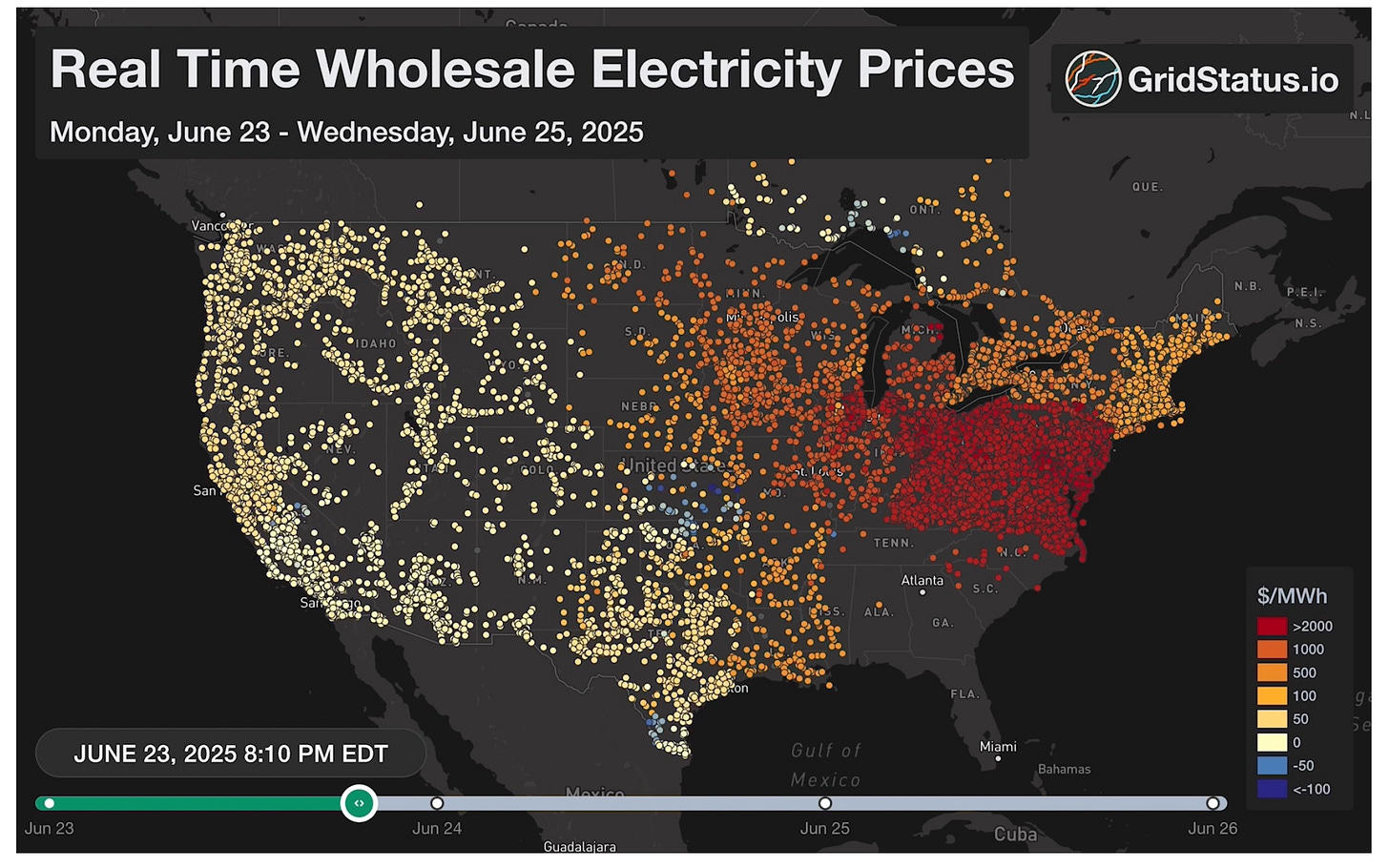

In the last week of June, a heat wave brought extreme temperatures to parts of Europe and North America. Several grid operators, including PJM, NYISO, and ISO-NE in the U.S. and IESO in Ontario, Canada, beat last summer’s load peak and approached or exceeded this summer’s forecast as well.

Reserve margins in PJM (part of the Eastern Interconnection) shrank to ~3%, day-ahead prices in ISO-NE (New England) cleared above $400/MWh, and Long Island’s real-time locational marginal price (LMP) briefly spiked above $9,000/MWh. Emergency demand response (DR), voluntary curtailments, and behind-the-meter solar helped keep the lights on, but the events also exposed how little controllable load remains on call.

I recommend reading GridStatus’ account of the events if you’re interested in electricity market dynamics.

The first heat wave of 2025 highlighted the escalating, year-over-year stress that extreme weather events place on our grid.

Data center consumption is a main driver of the load growth that’s exacerbating grid congestion. As a consequence, optimizing data center energy use is one of the keys to solving the challenges that electricity networks are facing.

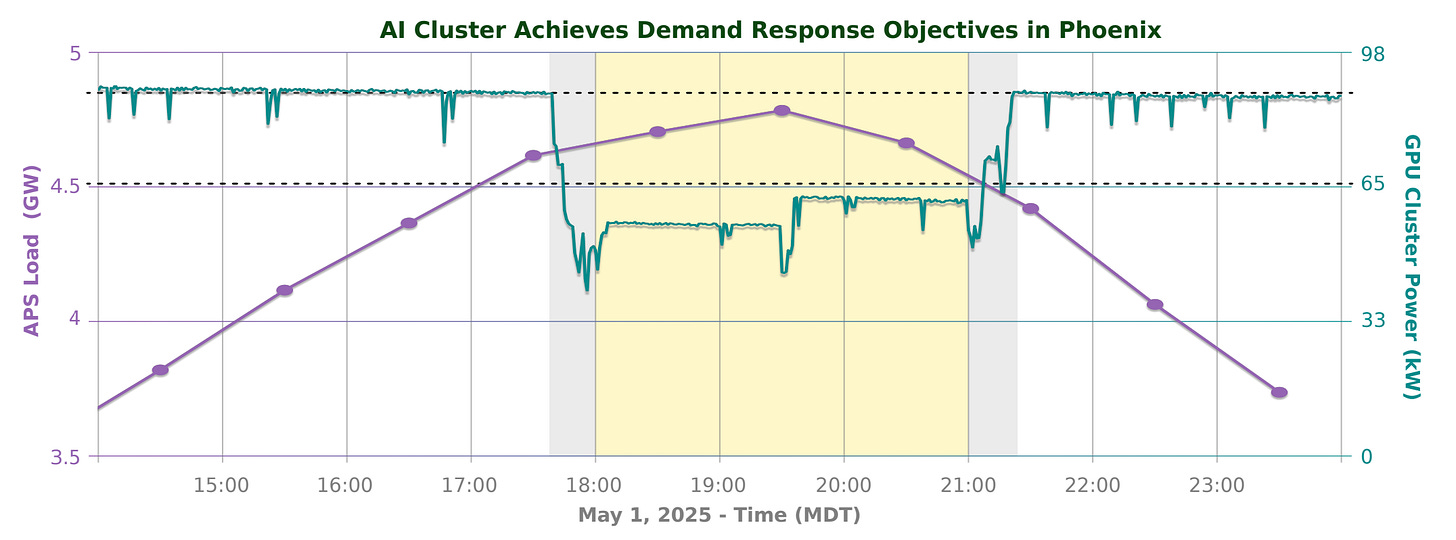

Emerald AI, a startup based in Washington, D.C., is trying to tap into this. During a field trial in an Oracle Cloud data center outside Phoenix, it reduced the power consumption of AI workloads running on a cluster of 256 NVIDIA GPUs by 25% for three hours during a grid stress event, while preserving compute service quality.[1]

The company, which just announced a $24.5M seed round, achieved this by dynamically managing the different types of jobs running in an AI data center. Less time-sensitive tasks, like model training or academic fine-tuning, can be slowed down or paused. In contrast, critical workloads, like real-time inference queries for a popular service, aren’t delayed but are instead redirected to a data center in a region with less grid stress. The full technical report on Emerald AI’s field trial can be found here.
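To make the prioritisation concrete, here is a minimal sketch of this kind of policy. It assumes each job reports its power draw and is tagged as deferrable, relocatable, or fixed. The job classes, the `min_fraction` throttling floor, the job names, and the greedy order are all hypothetical illustrations, not Emerald AI’s actual algorithm.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    power_kw: float
    kind: str  # "deferrable", "relocatable", or "fixed" (hypothetical job classes)
    min_fraction: float = 0.0  # lowest share of its power a deferrable job can be throttled to


def plan_reduction(jobs, target_kw):
    """Greedy plan to shed at least target_kw of site load.

    Hypothetical policy: throttle deferrable jobs first (largest headroom first),
    then relocate whole relocatable jobs to another region; fixed jobs are untouched.
    Returns a list of (job, action, kW shed) and the total kW shed.
    """
    actions, shed = [], 0.0
    deferrable = sorted(
        (j for j in jobs if j.kind == "deferrable"),
        key=lambda j: j.power_kw * (1 - j.min_fraction),
        reverse=True,
    )
    for job in deferrable:
        if shed >= target_kw:
            break
        cut = min(job.power_kw * (1 - job.min_fraction), target_kw - shed)
        actions.append((job.name, "throttle", cut))
        shed += cut
    relocatable = sorted((j for j in jobs if j.kind == "relocatable"),
                         key=lambda j: j.power_kw, reverse=True)
    for job in relocatable:
        if shed >= target_kw:
            break
        actions.append((job.name, "relocate", job.power_kw))  # moved as a whole job
        shed += job.power_kw
    return actions, shed


jobs = [
    Job("llm-pretraining", 400, "deferrable", min_fraction=0.5),
    Job("research-finetune", 100, "deferrable"),
    Job("chat-inference", 150, "relocatable"),
    Job("billing-db", 50, "fixed"),
]
site_kw = sum(j.power_kw for j in jobs)
actions, shed = plan_reduction(jobs, 0.25 * site_kw)  # a 25% cut, as in the field trial
print(actions, shed)
```

Relocating whole jobs can overshoot the target, and if the flexible capacity is too small the returned `shed` stays below the target. A production system would also have to track deadlines and quality-of-service limits.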

This is extremely relevant. A recent study from Duke University found that approximately 100 GW of new data center capacity could be connected to the grid by making data center demand more flexible. The logic is that the electricity grid usually operates with plenty of headroom: its capacity is only really tested during brief periods of extreme demand, like heat waves or winter storms. Consequently, there’s often enough spare capacity on the existing grid to connect new data centers, provided they are designed to be flexible and can reduce their power consumption during these rare high-demand periods.
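The headroom argument can be checked with simple arithmetic on an hourly load series. For a candidate new load, count the hours in which existing load plus the new load would exceed grid capacity, and the energy that would then have to be curtailed. The sketch below uses a made-up 100-hour load profile and capacity. It illustrates the idea only and does not reproduce the Duke study’s data or method.

```python
def curtailment_stats(load_mw, capacity_mw, new_load_mw):
    """Hours and energy (MWh) a flexible new load must shed so total load stays within capacity."""
    hours, energy_mwh = 0, 0.0
    for base in load_mw:  # one entry per hour
        excess = base + new_load_mw - capacity_mw
        if excess > 0:
            hours += 1
            energy_mwh += min(excess, new_load_mw)  # the new load can shed at most itself
    return hours, energy_mwh


def max_flexible_load(load_mw, capacity_mw, max_curtail_share, step_mw=1.0):
    """Largest new load (searched in step_mw increments) whose curtailed energy stays
    within max_curtail_share of the energy it would consume if never curtailed."""
    best, size = 0.0, step_mw
    while size < capacity_mw:
        _, energy = curtailment_stats(load_mw, capacity_mw, size)
        if energy > max_curtail_share * size * len(load_mw):
            break  # the curtailed share only grows with size, so stop at the first failure
        best = size
        size += step_mw
    return best


# Toy 100-hour series (made up): 95 ordinary hours and 5 peak hours.
load = [500.0] * 95 + [900.0] * 5
capacity = 1000.0
print("firm headroom:", max_flexible_load(load, capacity, 0.0), "MW")
print("with 0.5% curtailment:", max_flexible_load(load, capacity, 0.005), "MW")
```

In this toy series, accepting curtailment of 0.5% of the new load’s energy raises the connectable load from 100 MW of firm headroom to 111 MW. Applied to real hourly load data, the same kind of calculation shows how rare peaks, rather than typical hours, limit new connections.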

What I’m thinking

Being able to actively shift compute based on grid conditions, electricity prices, and clean-energy availability is key to deploying AI sustainably. With AI becoming more intertwined with energy, grid-aware computing is one of the most exciting spaces to be in, and there are a number of companies doing very interesting work. ElectricityMaps, for example, just released a carbon-aware scheduler that schedules compute jobs based on the grid’s carbon intensity.
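The core idea of carbon-aware scheduling fits in a few lines. Given an hourly carbon-intensity forecast, pick the start hour that minimises the average intensity over the job’s duration, subject to a deadline. This is a minimal sketch that assumes the forecast is already available as a list. The forecast values are made up, and the code neither uses nor reproduces the ElectricityMaps scheduler or its API.

```python
def best_start(forecast, duration_h, deadline_h=None):
    """Start hour and mean intensity of the lowest-carbon contiguous window.

    forecast: hourly carbon intensity (gCO2eq/kWh); the job must finish by deadline_h.
    """
    horizon = len(forecast) if deadline_h is None else min(deadline_h, len(forecast))
    if duration_h > horizon:
        raise ValueError("job does not fit before the deadline")
    window = sum(forecast[:duration_h])
    best_hour, best_sum = 0, window
    for start in range(1, horizon - duration_h + 1):
        # Slide the window by one hour: add the new last hour, drop the old first hour.
        window += forecast[start + duration_h - 1] - forecast[start - 1]
        if window < best_sum:
            best_hour, best_sum = start, window
    return best_hour, best_sum / duration_h


# Hypothetical 12-hour forecast with a midday solar dip.
forecast = [420, 410, 380, 300, 210, 180, 190, 250, 330, 400, 430, 440]
start, mean_g = best_start(forecast, duration_h=3)
print(f"run now: {sum(forecast[:3]) / 3:.0f} g/kWh; best start: hour {start} at {mean_g:.0f} g/kWh")
```

The sliding window keeps the search linear in the forecast length. Real schedulers also have to handle forecast uncertainty and jobs that can be paused and resumed.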

At the residential level, demand-side flexibility is also attracting attention. Apple presented EnergyKit, a new framework for Apple Home that will allow load scheduling according to prices. Octopus Energy introduced its first vehicle-to-grid bundle in the UK: for around £300/month, users get a BYD Dolphin, an EV charger, and access to a smart tariff that offers free home charging.

Most companies in the energy space are increasingly aware that a kWh is not just a kWh: when that kWh is consumed makes all the difference. This distinction mattered little for most of the past century, but it will be one of the main trends in the energy sector over the coming decades.
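A toy calculation shows why timing matters: the same 10 kWh of flexible consumption costs very different amounts depending on when it runs. The hourly prices below are hypothetical day-ahead values in €/MWh, chosen only to illustrate the point. They cover the wholesale energy component only, not network charges or taxes.

```python
# Hypothetical day-ahead prices for one day in €/MWh (index 0 = 00:00-01:00).
prices_eur_mwh = [95, 88, 80, 78, 79, 85, 110, 140, 120, 70, 40, 15,
                  5, 0, 10, 35, 80, 150, 210, 190, 160, 130, 115, 100]


def cost_eur(energy_kwh, hours, prices):
    """Cost of spreading energy_kwh evenly across the given hours."""
    mwh_per_hour = energy_kwh / 1000 / len(hours)
    return sum(prices[h] * mwh_per_hour for h in hours)


load_kwh = 10.0  # the same 10 kWh of flexible consumption, e.g. part of an EV charge
evening = cost_eur(load_kwh, [18, 19], prices_eur_mwh)
midday = cost_eur(load_kwh, [12, 13], prices_eur_mwh)
spread = max(prices_eur_mwh) - min(prices_eur_mwh)
print(f"evening peak: €{evening:.2f} | midday: €{midday:.3f} | daily spread: {spread} €/MWh")
```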

If you want to get a grasp of the impact and size of price volatility, I recommend the Python tutorial I wrote on the topic a couple of months ago.

Updates on the Iberian blackout

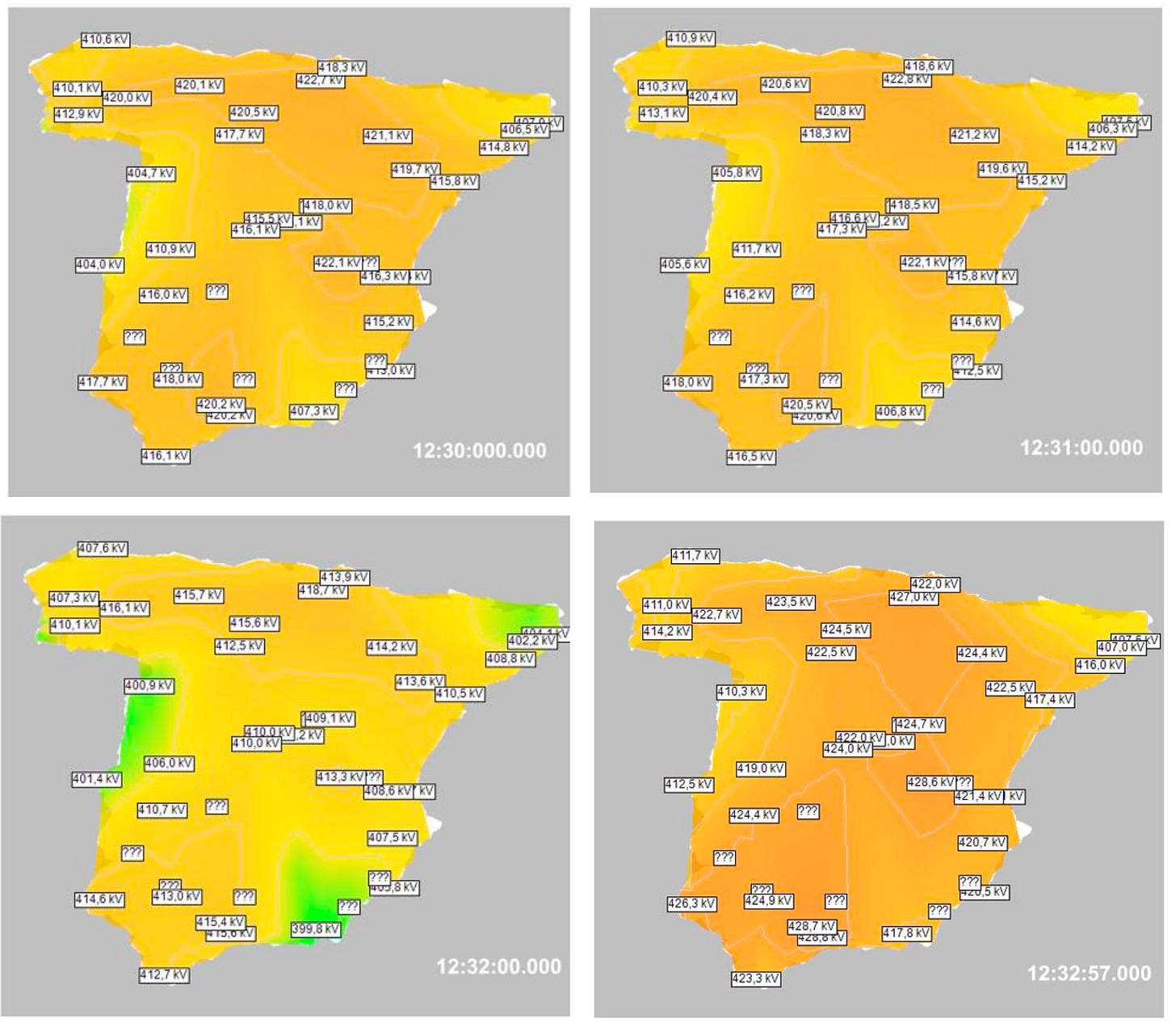

Last month I wrote about the Iberian Peninsula blackout and contrasted different theories. Since then, Spain’s official investigations have clarified that the 28 April blackout was above all a multifactorial overvoltage and reactive-power control failure, not primarily a frequency or inertia event.[2][3]

System oscillations in the preceding hours, including an atypical one traced to a specific installation in southwest Spain, pushed voltages higher just as the system entered the critical midday window with insufficient dynamic voltage control. The investigations also noted that one of ten programmed thermal units was declared unavailable on the same day, but was not replaced by Red Eléctrica.
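For readers less familiar with why reactive power governs voltage, consider a line with resistance R and reactance X that carries active power P and reactive power Q from a sending end s to a receiving end r. The standard textbook approximation for the voltage difference along that line is:

$$\Delta V = V_s - V_r \approx \frac{R\,P + X\,Q}{V_r} \approx \frac{X\,Q}{V_r} \qquad \text{(transmission lines, } X \gg R\text{)}$$

On high-voltage networks the second approximation dominates, so voltages are controlled mainly by managing reactive-power flows. When too few units are available to absorb reactive power, Q can reverse (Q < 0), and the receiving-end voltage rises above the sending-end voltage. This is a general illustration of the mechanism, not a model of the 28 April event.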

Although the anti-renewable narrative blamed the blackout on low system inertia caused by renewables, the investigations showed that inertia on 28 April actually exceeded ENTSO-E guidance. Madrid has also reiterated that the blackout was not caused by high renewable penetration.

What I’m thinking

I don’t see this as an isolated incident, and I believe it should be a wake-up call for all system operators. No grid is immune to the changes that are coming, and if operators don’t plan ahead, these kinds of events will only become more frequent.

Red Eléctrica is under a lot of stress right now. Its sole responsibility is to guarantee the functioning and stability of the network, and right now it is struggling to do that. We need to start digitalizing our grids now and get creative about solutions, because the challenges of modern electricity networks are not going away by themselves.

What is the net climate impact of AI?

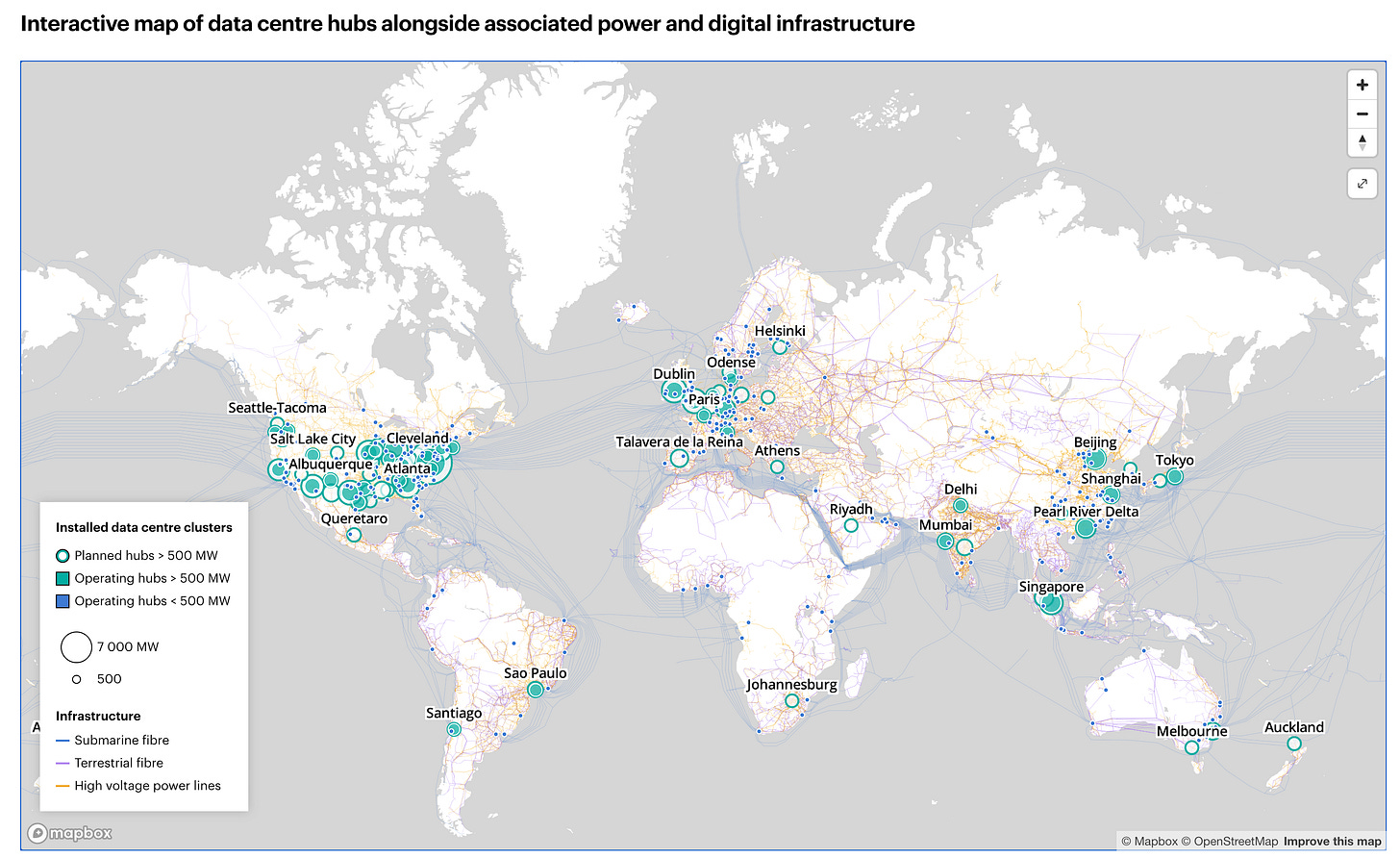

Google’s latest sustainability report showed how quickly AI’s physical footprint is overwhelming corporate climate goals. Its emissions are up 51% versus 2019, electricity use jumped 27% year-on-year, and Scope 3 supply-chain impacts tied to data-centre build-out surged 22% in 2024.[4] Even aggressive clean-power contracting can’t outrun physics when growth is exponential and next-gen low-carbon sources like advanced geothermal or small modular reactors remain behind schedule.
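To see why exponential growth is so hard to outrun, look at the doubling time implied by a constant annual growth rate r, which is ln 2 / ln(1 + r). The sketch below applies it to the 27% year-on-year electricity growth quoted above. It makes the simplifying, purely hypothetical assumption that the rate stays constant.

```python
import math


def doubling_time_years(annual_growth):
    """Years for a quantity to double at a constant annual growth rate (0.27 = 27%)."""
    return math.log(2) / math.log(1 + annual_growth)


def growth_factor(annual_growth, years):
    """Multiplicative growth after the given number of years at a constant rate."""
    return (1 + annual_growth) ** years


rate = 0.27  # year-on-year electricity growth quoted in the report, assumed constant here
print(f"doubling time: {doubling_time_years(rate):.1f} years")
print(f"growth after 5 years: {growth_factor(rate, 5):.2f}x")
```

At a constant 27% per year, demand doubles in roughly 2.9 years and grows about 3.3x in five years. Clean-power procurement would have to compound at the same pace just to keep its share of consumption constant.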

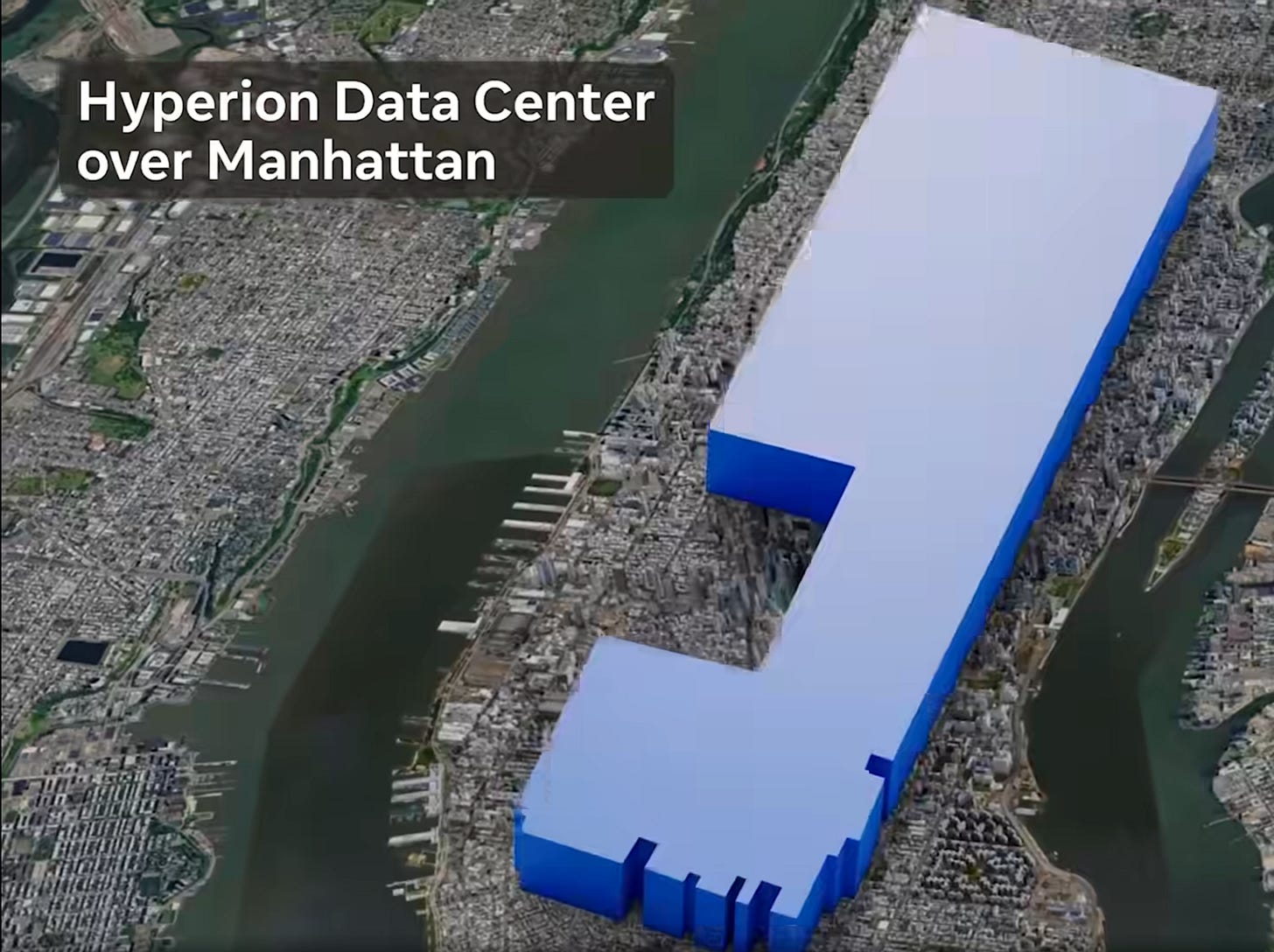

Meta also made headlines when Zuckerberg announced the construction of Hyperion, a cluster designed to scale to 5 GW, with a footprint he compared to a significant part of Manhattan.

I’ve spent the past 8 years implementing AI for climate-positive applications, and I often wonder about the balance between the negative and positive impacts of AI on the climate. The Net Climate Impact of AI report by Jennifer Turliuk provides a good account of the variables to consider. On the one hand, there are AI’s climate harms (infrastructure, manufacturing, water use, and even enabling fossil fuel exploration). On the other, there are its potential benefits (grid optimisation, predictive maintenance).

Current literature puts realistic AI-enabled emissions reductions at perhaps 1.5–4% by 2030. But given the pace of data centre deployment, and the pressure this will put on clean generation targets, there is reason to be at least worried about the overall climate impact of AI. The University of Oxford also published a study on this, empirically finding an inverted-U curve. Early AI adoption raises energy use and CO₂ emissions, and net environmental gains emerge only beyond high spending thresholds (~US$220–580 per capita).
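An inverted U like this is commonly modelled with a quadratic term. The derivation below assumes a generic specification, not necessarily the one used in the Oxford study. Here E is energy use or emissions and x is AI spending per capita:

$$E(x) = \beta_0 + \beta_1 x + \beta_2 x^2, \qquad \beta_1 > 0,\; \beta_2 < 0$$

$$\frac{dE}{dx} = \beta_1 + 2\beta_2 x = 0 \;\Longrightarrow\; x^{*} = -\frac{\beta_1}{2\beta_2}$$

$$E(x) - E(0) = x\,(\beta_1 + \beta_2 x) < 0 \quad \text{for } x > -\frac{\beta_1}{\beta_2} = 2x^{*}$$

Under this form, each extra unit of adoption reduces emissions once x passes the turning point x*. However, emissions only fall below their no-AI level beyond 2x*, which is why the position of such thresholds matters so much.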

The International Energy Agency is also turning its attention to AI (who isn’t?). It recently launched the Energy & AI Observatory, which aims to track the energy required to power AI while also documenting AI applications in the energy sector. Give it a look here.

What I’m thinking

Besides the sustainability concerns over AI, which I wrote about extensively in previous articles, the map above shows a growing compute inequality. Most large data centers, those able to train and host state-of-the-art AI models, are concentrated in the U.S., China, and the European Union, leaving several countries in the developing world completely cut out. Not coincidentally, energy is one of the reasons for this AI compute divide. To have these large clusters operating, governments need to have invested heavily in their energy systems, in electricity generation as well as transmission and distribution.

This seems to be yet another trend that, if left to market dynamics, will increase global inequality and the dependence of countries in Africa, South America, and Asia on global superpowers.

2. Scientific publications

Reinforcement Learning for grid-interactive electrification

If we’re serious about the energy transition, we will have to transform very complex systems such as transportation, the buildings sector, and the electricity network. Traditionally, it’s been very difficult to model these systems with rule-based methods or traditional machine learning, due to their complexity and the high number of variables at play. Reinforcement Learning now offers a promising alternative for making optimal decisions in these complex environments.

Three papers on this topic came out this past month:

“Scalable reinforcement learning for large-scale coordination of electric vehicles using graph neural networks” introduces EV-GNN, a graph neural-network solution that models the complex relationships between EVs, charging stations, and the grid as a graph, which is then used to train a deep reinforcement learning agent to optimize charging.

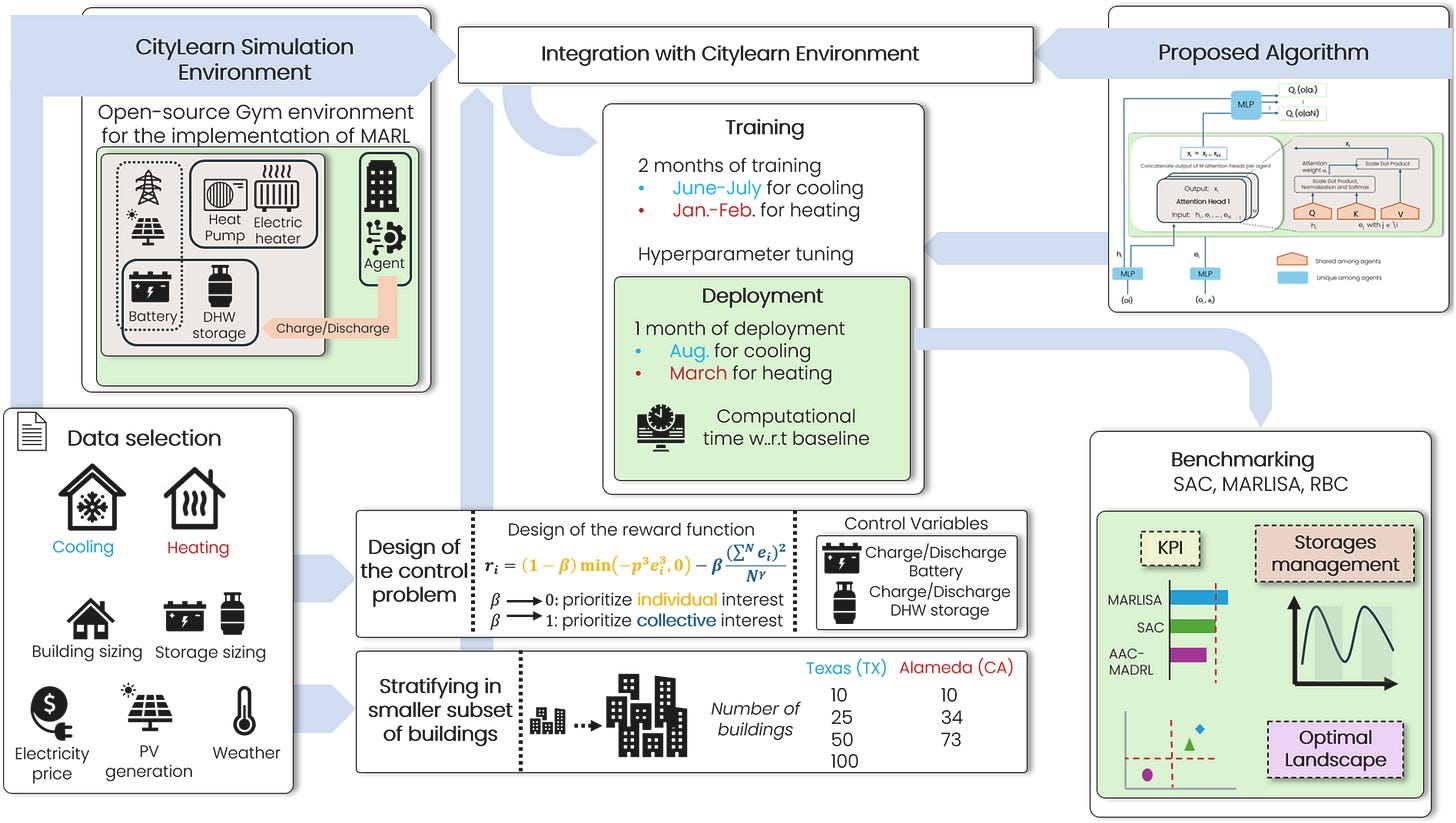

“A scalable demand-side energy management control strategy for large residential districts based on an attention-driven multi-agent DRL approach” proposes an attention-based multi-agent reinforcement learning algorithm where each building in a district acts as an agent. These agents learn to cooperate in managing their energy consumption, storage, and generation to achieve collective goals like reducing peak demand and minimizing energy costs.
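The attention mechanism in approaches like this lets each agent weigh information from the other agents. Below is a minimal, dependency-free sketch of scaled dot-product attention over building observations. It makes two simplifying assumptions: the feature choice is hypothetical, and raw states serve directly as queries, keys, and values. In a real model these would be learned projections. This is not the paper’s architecture.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention: softmax(q.k / sqrt(d)) weights, then a weighted sum of values."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, context


# Hypothetical normalised observations per building: [current load, battery state of charge].
states = {
    "building_a": [0.9, 0.2],
    "building_b": [0.8, 0.3],
    "building_c": [0.1, 0.9],
}
obs = list(states.values())
for name, own in states.items():
    weights, context = attend(own, obs, obs)  # each agent queries all agents (itself included)
    print(name, [round(w, 2) for w in weights], [round(c, 2) for c in context])
```

Each agent’s `context` vector summarises the district from its own point of view and would feed that agent’s policy network. Learned projections let agents discover which neighbours matter for coordination, rather than simply attending to similar buildings as they do here.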

“RL2Grid: Benchmarking Reinforcement Learning in Power Grid Operations” introduces a new platform for developing and testing RL agents for grid control. The platform aims to provide a standardized and realistic simulation environment for tasks like managing power flow and preventing blackouts.
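For readers new to RL, all three papers build on the same basic loop: an agent observes a state, takes an action, receives a reward, and updates its value estimates. A deliberately tiny tabular Q-learning example shows this loop. Here an EV decides each hour whether to charge, given a fixed price profile. Everything in it (the 6-hour horizon, prices, battery units, penalty) is a hypothetical toy. The papers use deep RL with graph neural networks and attention, not a Q-table.

```python
import random

PRICES = [30, 25, 5, 8, 40, 50]  # hypothetical price per energy unit in each hour
NEED = 2                         # units the EV must have charged by departure
PENALTY = 100                    # cost per unit still missing at departure
H = len(PRICES)


def step(hour, charge, action):
    """Toy environment transition: action 1 charges one unit and pays that hour's price."""
    reward = 0.0
    if action == 1:
        reward -= PRICES[hour]
        charge = min(charge + 1, NEED)  # charging a full battery just wastes money
    hour += 1
    done = hour == H
    if done:
        reward -= PENALTY * (NEED - charge)
    return hour, charge, reward, done


def greedy(q, hour, charge):
    return max((0, 1), key=lambda a: q[(hour, charge)][a])


def train(episodes=20000, alpha=0.1, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration (undiscounted, finite horizon)."""
    rng = random.Random(seed)
    q = {(h, c): [0.0, 0.0] for h in range(H) for c in range(NEED + 1)}
    for _ in range(episodes):
        hour, charge, done = 0, 0, False
        while not done:
            action = rng.randrange(2) if rng.random() < epsilon else greedy(q, hour, charge)
            nxt_hour, nxt_charge, reward, done = step(hour, charge, action)
            target = reward if done else reward + max(q[(nxt_hour, nxt_charge)])
            q[(hour, charge)][action] += alpha * (target - q[(hour, charge)][action])
            hour, charge = nxt_hour, nxt_charge
    return q


def schedule(q):
    """Roll out the learned greedy policy: 1 = charge in that hour, 0 = wait."""
    hour, charge, done, plan = 0, 0, False, []
    while not done:
        action = greedy(q, hour, charge)
        plan.append(action)
        hour, charge, _, done = step(hour, charge, action)
    return plan


q_table = train()
print("charging plan by hour:", schedule(q_table))
```

The agent is never told the prices in advance; it learns to wait for the two cheapest hours purely from rewards. Deep RL replaces the table with a neural network so the same loop scales to thousands of EVs or buildings.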

What I’m thinking

Reinforcement Learning is behind many of the breakthroughs of modern Machine Learning, including the training of state-of-the-art LLMs. In the energy sector, real-world applications haven’t taken off yet. This is likely because energy is a critical domain where we need more confidence and research before letting self-learning algorithms take the reins. However, the escalating challenges we’re witnessing, like the grid stability problems I covered earlier, mean we are now essentially forced to explore these advanced solutions. Over the next few years, I believe we’ll see more and more of these applications move from the research stage to industry.

The code for both EV-GNN and RL2Grid is open-source and available on GitHub. It’s a great place to start if you want to spend your summer side project learning how real-world RL agents in the energy sector are built.

Blending Machine Learning and physics in the buildings sector

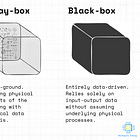

Traditional black-box Machine Learning methods often struggle when applied blindly to the buildings sector: they don’t understand the underlying physical processes that govern building dynamics, they struggle when there’s not enough data, and they lack interpretability, making it difficult for building operators and energy managers to trust their predictions or understand why certain control decisions are recommended.

Three trends that I’ve been following closely and that promise to solve these issues of traditional methods are transfer learning, digital twins, and physics-informed models.

Three papers tackling these topics came out last month:

“GenTL: A General Transfer Learning Model for Building Thermal Dynamics.” In this paper, researchers pretrained a 3-layer LSTM on simulations of 450 archetypal Central European single-family houses. The result is a universal source model that can be fine-tuned to new target buildings with only 5–15% of a year’s data. In initial tests across 144 target buildings, it achieved an average 42.1% RMSE reduction relative to conventional single-source transfer learning, with substantially lower error variance.
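The pretrain-then-fine-tune idea can be illustrated without an LSTM. The sketch below swaps in the simplest form of parameter transfer, so it is not GenTL. A two-parameter physics-based model, dT = k·(T_out − T) + g·Q, is fitted on pooled simulations of several hypothetical archetypes. It is then fine-tuned on only six hours of data from a new building, with a ridge penalty (strength `lam`) that keeps it close to the source estimate. All parameters and data are synthetic assumptions.

```python
import math
import random


def fit(samples, prior=(0.0, 0.0), lam=0.0):
    """Fit dT = k*(T_out - T) + g*Q by least squares, pulled toward prior=(k0, g0) with strength lam.

    Solves the 2x2 normal equations (X^T X + lam*I) w = X^T y + lam*w0 in closed form.
    samples: list of (T, T_out, Q, T_next) tuples.
    """
    a11 = a12 = a22 = b1 = b2 = 0.0
    for t, t_out, q, t_next in samples:
        x1, x2, y = t_out - t, q, t_next - t
        a11 += x1 * x1
        a12 += x1 * x2
        a22 += x2 * x2
        b1 += x1 * y
        b2 += x2 * y
    a11 += lam
    a22 += lam
    b1 += lam * prior[0]
    b2 += lam * prior[1]
    det = a11 * a22 - a12 * a12
    return (a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det


def simulate(k, g, hours, rng, t0=20.0):
    """Noise-free hourly samples from a one-node thermal model with loss rate k and heating gain g."""
    samples, t = [], t0
    for _ in range(hours):
        t_out, q = rng.uniform(-5.0, 15.0), rng.choice([0.0, 1.0, 2.0])
        t_next = t + k * (t_out - t) + g * q
        samples.append((t, t_out, q, t_next))
        t = t_next
    return samples


rng = random.Random(42)
# "Source": pooled simulations of several hypothetical archetypes with different (k, g).
source = []
for k, g in [(0.05, 0.8), (0.08, 1.0), (0.06, 1.2), (0.10, 0.9)]:
    source += simulate(k, g, 200, rng)
w_src = fit(source)

# "Target": a new building observed for only 6 hours.
true_target = (0.07, 1.1)
target = simulate(*true_target, 6, rng)
w_ft = fit(target, prior=w_src, lam=50.0)


def error(w):
    return math.hypot(w[0] - true_target[0], w[1] - true_target[1])


print(f"source-only error: {error(w_src):.4f}, fine-tuned error: {error(w_ft):.4f}")
```

The penalty toward the source parameters is what makes a handful of target samples usable. On real, noisy, and gappy data, fitting from scratch on six hours would be unstable, while the prior keeps estimates physically plausible.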

“A digital twin platform for building performance monitoring and optimization: Performance simulation and case studies.” Researchers at Lawrence Berkeley National Laboratory (LBNL) built a modular, open, web-based digital twin platform. Through an interoperable data pipeline, it fuses live sensor and meter streams, external weather feeds, EnergyPlus physics models, and DER Functional Mock-up Units (FMUs). The platform can be used to simulate how a building would behave under different scenarios.
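The “what-if” use of a digital twin boils down to running a calibrated model forward under alternative inputs. The sketch below does this with a deliberately simple one-node (1R1C-style) thermal model and a hypothetical night-setback scenario. It does not attempt to reproduce the paper’s EnergyPlus/FMU coupling. The model parameters, outdoor temperature, and heater size are made-up assumptions.

```python
def simulate_day(setpoints_c, outdoor_c, k=0.1, gain_c_per_kw=0.5, max_kw=4.0, t0=20.0):
    """Hourly indoor temperature and heating energy for a one-node (1R1C-style) thermal model.

    Each hour: T_next = T + k*(T_out - T) + gain*P, where the heater picks the power P (kW)
    that lands on the setpoint, clipped to [0, max_kw].
    """
    t, energy_kwh, temps = t0, 0.0, []
    for sp, t_out in zip(setpoints_c, outdoor_c):
        drift = k * (t_out - t)
        p = min(max((sp - t - drift) / gain_c_per_kw, 0.0), max_kw)
        t = t + drift + gain_c_per_kw * p
        energy_kwh += p  # one-hour steps, so kW x 1 h = kWh
        temps.append(t)
    return temps, energy_kwh


outdoor = [2.0] * 24  # hypothetical cold, flat day
baseline_sp = [21.0] * 24
setback_sp = [17.0 if h < 6 or h >= 22 else 21.0 for h in range(24)]  # night setback scenario

base_temps, base_kwh = simulate_day(baseline_sp, outdoor)
setback_temps, setback_kwh = simulate_day(setback_sp, outdoor)
print(f"baseline: {base_kwh:.1f} kWh, night setback: {setback_kwh:.1f} kWh")
print("coldest hour with setback:", round(min(setback_temps), 1), "°C")
```

A real twin would calibrate `k` and `gain_c_per_kw` against live meter and sensor data and would also check comfort constraints before recommending a scenario.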

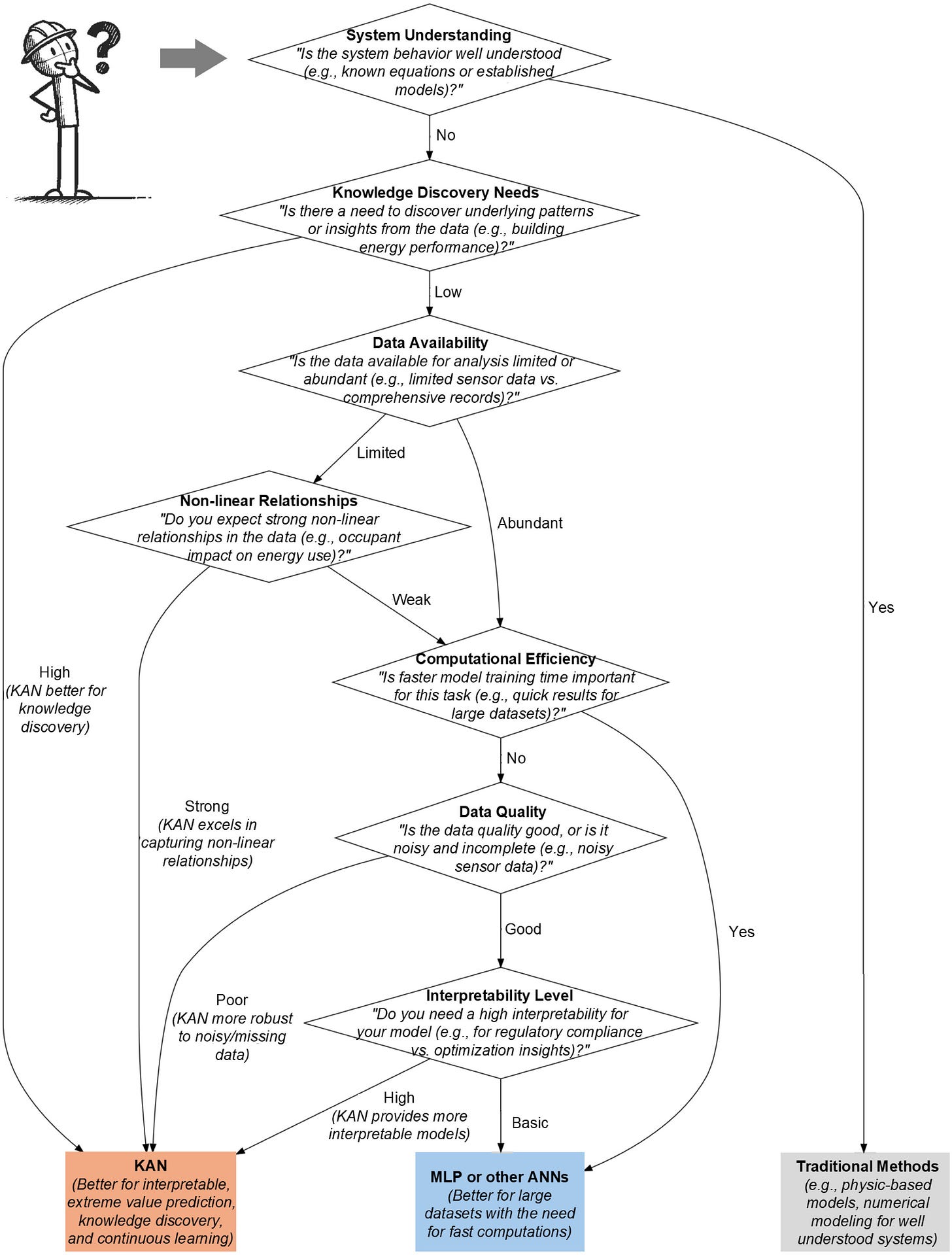

“Integrating symbolic neural networks with building physics: A study and proposal.” The authors of this paper explored physics-informed neural modeling. They demonstrated that Kolmogorov–Arnold Networks (KANs) can rediscover fundamental heat transfer equations, approximate complex formulas, and capture time-dependent dynamics. They also provide a handy flowchart for deciding when to use KANs versus other ANNs or traditional physical modeling.

What I’m thinking

At Ento we use a lot of traditional black-box Machine Learning models to predict the energy consumption of buildings. They work really well for some of our use cases, but in situations when:

Indoor sensor data is available

There are gaps in the data

We want to actively control a building’s HVAC system

… plain black-box modeling often falls short. That’s why I’ve been closely monitoring these trends. I’m excited by the possibilities opened up by digital twin platforms and by blending data-driven methods with physical modeling.

If you’re interested in an introduction to the trade-offs and characteristics of different modeling strategies in the buildings sector, I wrote a detailed overview in a previous article.

3. Reimagine Energy content

I had fun presenting AI applications in the energy sector at the iEner’25 conference in Madrid, organized by the Association of Energy Engineers.

Find the slides from the event below (in Spanish):

4. AI in Energy job board

This space is dedicated to job posts in the sector that caught my attention during the last month. I have no affiliation with any of them; I’m just looking to help readers connect with relevant jobs in the market.

Senior Control and Optimization Engineer at EnergyHub

PhD in Machine Learning Techniques Applied To Power Grids at Escuela Politécnica Superior de la Universidad de Alcalá

Conclusion

With so much going on in the sector, it’s not easy to follow everything. If you’re aware of anything that seems relevant and should be included in Currents (job posts, scientific articles, relevant industry events, etc.), please reply to this email or reach out on LinkedIn and I’ll be happy to consider them for inclusion!

[1] https://blogs.nvidia.com/blog/ai-factories-flexible-power-use/

[2] https://www.lamoncloa.gob.es/consejodeministros/resumenes/paginas/2025/170625-rueda-de-prensa-ministros.aspx

[3] https://www.ree.es/es/sala-de-prensa/actualidad/nota-de-prensa/2025/06/red-electrica-presenta-su-informe-del-incidente-del-28-de-abril-y-propone-recomendaciones

[4] https://www.theguardian.com/technology/2025/jun/27/google-emissions-ai-electricity-demand-derail-efforts-green
