Powering the next decade of AI

Currents: AI & Energy Insights - May 2025

Welcome back to Currents, a monthly column from Reimagine Energy dedicated to the latest news at the intersection of AI & Energy. At the end of each month, I send out an expert-curated summary of the most relevant updates from the sector. The focus is on major industry news, published scientific articles, a recap of the month’s posts from Reimagine Energy, and a dedicated job board.

1. Industry news

How much energy does AI use?

This is a million-dollar question, and one that’s surprisingly difficult to answer. Reporters from MIT Technology Review recently published a great piece on it, and I suggest at least skimming through it. It’s a very good introduction to the topic, with several intuitive yet thorough explanations. The authors measured the GPU energy consumed during inference by different open‑source models. These were the results:

6700 J (1.8 Wh) for a text response with Llama 3.1 405B

4400 J (1.2 Wh) for an image generated with Stable Diffusion 3 Medium

3.4 MJ (0.9 kWh) for a 5-second video at 16 frames per second using CogVideoX

We don’t have equivalent data for flagship proprietary models unless their owners decide to publish it, but these open‑source benchmarks at least give us an order‑of‑magnitude sense of where today’s AI workloads sit on the energy scale.

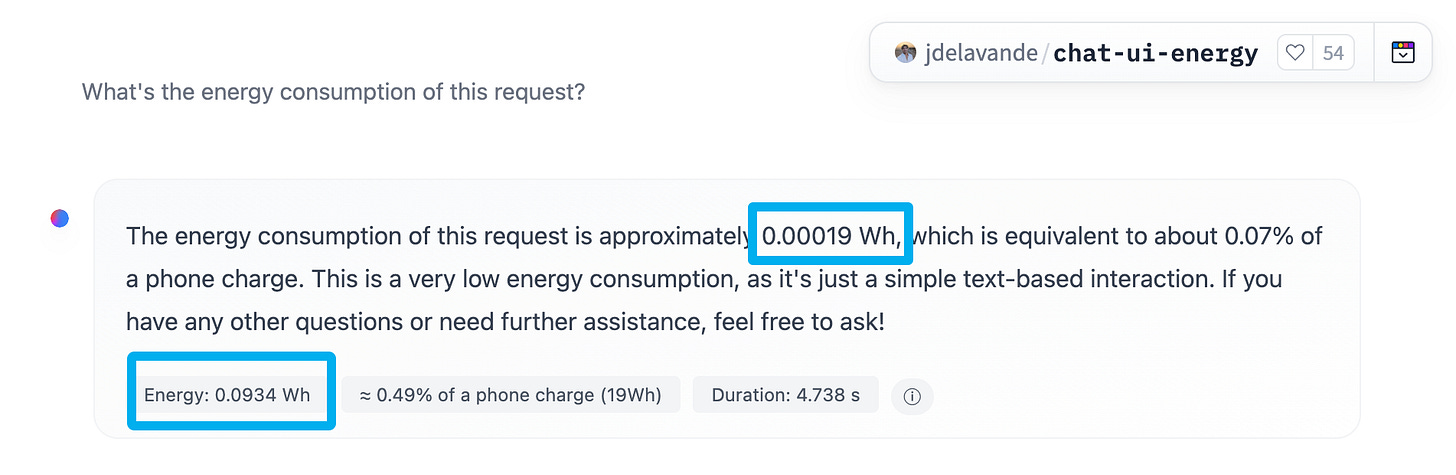

If you want to build an intuition for these numbers, you can try Chat UI, developed by researchers at Hugging Face, which shows a live estimate of GPU energy consumption for different open-source models.

It’s also a great example of the LLM hallucination problem, as you can see in the image below.

If we extrapolate these numbers to multiple billions of daily queries (in December, OpenAI said that ChatGPT receives 1 billion messages every day [1]), we get to consumption values in the range of GWh per day. This does not even take into account the extra computation used by reasoning models. If we stop for a second and think about how we will soon be surrounded by agents continuously running multiple tasks in the background for us, the total energy demand for AI reaches incredibly high numbers.
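The extrapolation can be reproduced with a few lines of Python. This is only a back-of-the-envelope sketch: it assumes every message costs as much as the Llama 3.1 405B text response measured above, which is almost certainly not true for proprietary models, and the function name is just illustrative.

```python
# Back-of-the-envelope extrapolation from per-response energy to daily demand.
JOULES_PER_WH = 3600.0  # 1 Wh = 3600 J


def daily_energy_gwh(joules_per_response: float, responses_per_day: float) -> float:
    """Daily energy in GWh if every response used the given amount of energy."""
    wh_per_day = joules_per_response / JOULES_PER_WH * responses_per_day
    return wh_per_day / 1e9  # 1 GWh = 1e9 Wh


# Assumption: every message costs as much as the 6700 J text response above.
PER_RESPONSE_J = 6_700

for messages_per_day in (1e9, 5e9, 10e9):
    gwh = daily_energy_gwh(PER_RESPONSE_J, messages_per_day)
    print(f"{messages_per_day:.0e} messages/day -> {gwh:.1f} GWh/day")
```

At 1 billion messages per day this gives a little under 2 GWh per day, and the result scales linearly with the number of messages.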

How much does this amount to today?

A few months back, we analyzed the 2024 report on data center energy usage published by Lawrence Berkeley Lab. The report says that data centers in the US used somewhere around 200 terawatt-hours of electricity in 2024, roughly what it takes to power Thailand for a year. AI-specific servers in these data centers are estimated to have used between 53 and 76 terawatt-hours of electricity. On the high end, this is enough to power more than 7.2 million US homes for a year.
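To see where the "homes powered" comparison comes from, here is a minimal sketch. The 53, 76 and 200 TWh figures are the ones quoted above; the average household consumption of about 10,500 kWh per year is my own assumption and not a number taken from the report.

```python
# Rough check of the "homes powered" comparison for AI-specific servers.
# Assumption (not from the report): an average US home uses about
# 10,500 kWh of electricity per year.
KWH_PER_TWH = 1e9
ASSUMED_KWH_PER_HOME_PER_YEAR = 10_500


def homes_powered(twh_per_year: float,
                  kwh_per_home: float = ASSUMED_KWH_PER_HOME_PER_YEAR) -> float:
    """Number of homes that could be supplied for a year with the given energy."""
    return twh_per_year * KWH_PER_TWH / kwh_per_home


def share_of_total(part_twh: float, total_twh: float) -> float:
    """Fraction of total data center consumption taken by one component."""
    return part_twh / total_twh


AI_LOW_TWH, AI_HIGH_TWH, ALL_DATA_CENTERS_TWH = 53, 76, 200

for label, twh in (("low", AI_LOW_TWH), ("high", AI_HIGH_TWH)):
    homes = homes_powered(twh) / 1e6
    share = share_of_total(twh, ALL_DATA_CENTERS_TWH)
    print(f"{label} estimate: {homes:.1f} million homes, {share:.1%} of US data center use")
```

Under that assumption, the high estimate corresponds to roughly 7.2 million homes, and AI-specific servers account for somewhere between a quarter and two-fifths of total US data center consumption.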

What does the infrastructure required to support this look like?

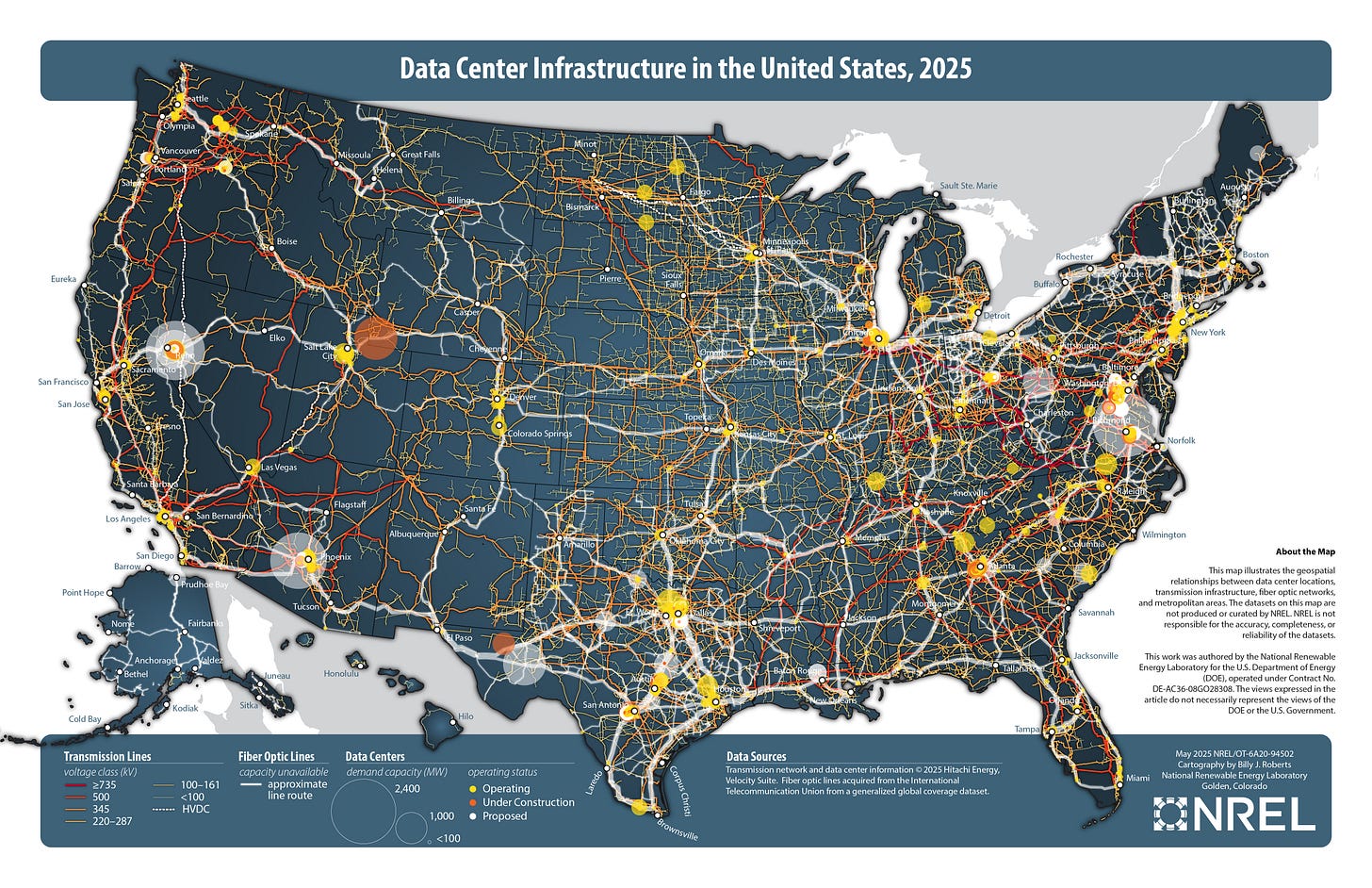

As I’ve mentioned before, I really love a good map. And this one from NREL is worth more than a thousand words. Note how the average data center size increases considerably from existing facilities (yellow), to those under construction (orange), to proposed ones (white), with the latter reaching 2.4 GW, close to the entire generation capacity of Iceland [2].

Beyond electricity consumption, what about water use?

Aside from the energy requirements, data centers also need significant amounts of water for cooling. Most large data centers cool their servers with evaporative systems, meaning that, while water is recirculated, some of it is lost to evaporation in each loop. To get an idea of the ballpark numbers, Google’s data centers consumed around 23B liters [3] (6B gallons) of water in 2024, which is just about the water used in a year by the country of Cabo Verde [4]. A recent investigation from Bloomberg News found that about two-thirds of new data centers built or in development since 2022 are in areas experiencing high levels of water stress [5]. And the trend seems set to continue, with OpenAI and the U.S. government recently signing a deal to build massive data centers in the United Arab Emirates, starting with an AI campus in Abu Dhabi [6]. How does Abu Dhabi fare in terms of fresh water supply? Not well, as you might imagine.

Seawater cooling might sound like an obvious solution, but the salt quickly ruins ordinary pipes, meaning that pricey corrosion-proof infrastructure is needed. The raw intake also comes packed with sand, algae and marine critters that need to be filtered.

Powering the next decade of AI

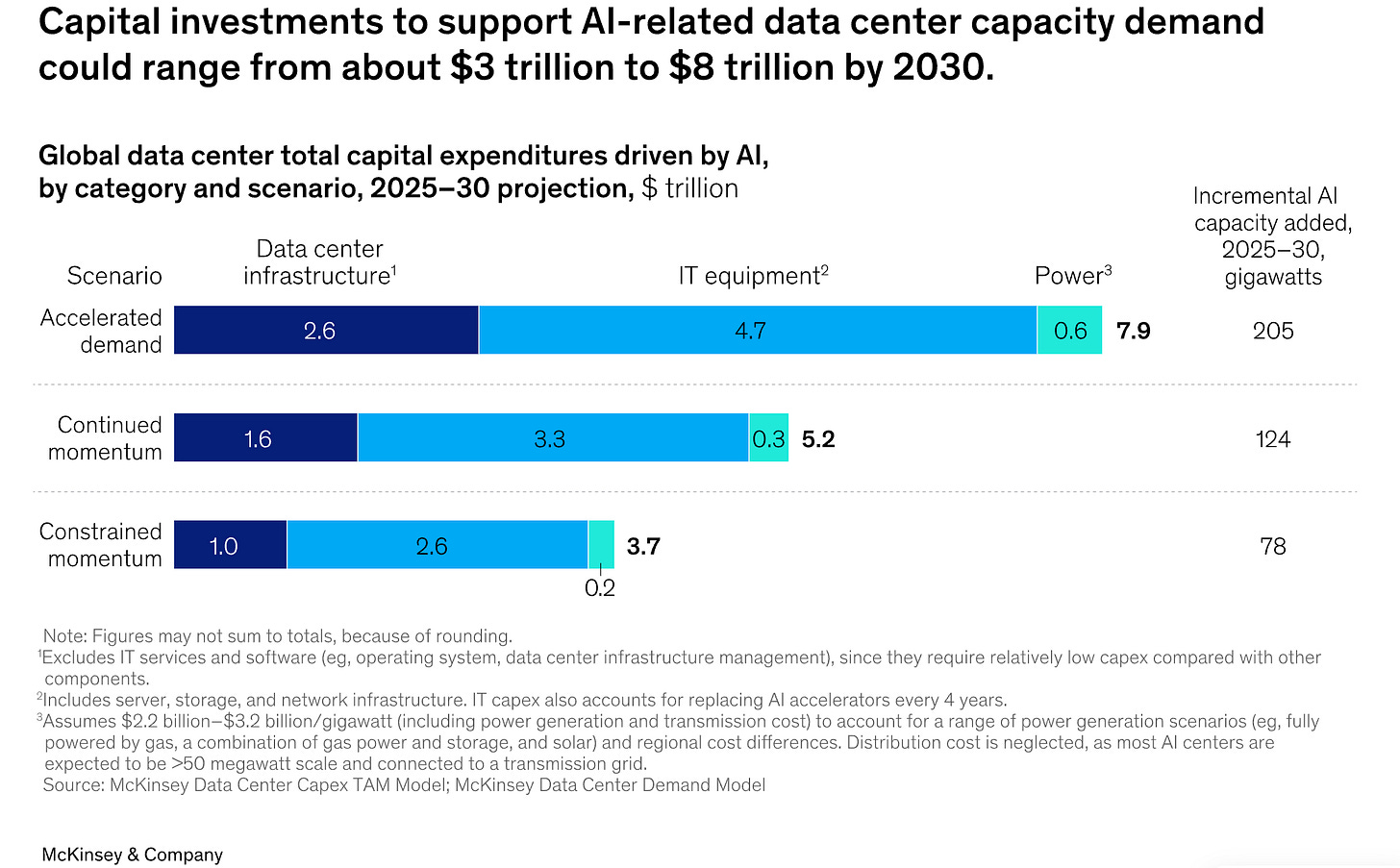

When we look at data center consumption trends, the numbers are enough to scare any system operator. We might reach 1,000 TWh of data center consumption by 2030 [7]. McKinsey recently said that the global cost of scaling up AI infrastructure might be as high as $8 trillion, with more than $500 billion dedicated to power generation and transmission alone [8].

Even knowing the price tag, the path to get there is anything but clear. Tens of GW have to be deployed each year, while also upgrading the grid infrastructure to be able to handle increased demand levels. Can this practically be achieved?

Nuclear Renaissance

Nuclear energy has been a very hot topic for the last few months: it’s carbon-free, it’s reliable, and, unlike renewables, it has support across the political spectrum. The Trump administration just signed four executive orders aimed at quadrupling America’s nuclear capacity over the next 25 years, bringing it from around 100 GW today to 400 GW by 2050 [9]. There is no clear path to get there, although one of the orders states that 10 new reactors with completed designs must be under construction by 2030 [10]. The private sector is also gaining momentum: NuScale Power just won US approval for its small nuclear reactor design, and Google announced a partnership with Elementl Power for three nuclear projects that will each produce 600 MW [11].

While nuclear will certainly play an increasingly relevant role in the long run, it’s clear that the timelines don’t match. The AI compute increase is happening today, while these nuclear projects will take years, if not decades, to be operational.
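To put the 100 GW to 400 GW target in perspective, the sketch below computes the pace it implies, both as a constant yearly addition and as a compound growth rate. It only uses the figures quoted above and deliberately ignores retirements of existing reactors, which would push the required additions higher.

```python
# Pace implied by growing nuclear capacity from about 100 GW to 400 GW in 25 years.
# Simplification: retirements of existing reactors are ignored.


def required_growth(start_gw: float, target_gw: float, years: int) -> tuple[float, float]:
    """Return (constant GW added per year, equivalent compound annual growth rate)."""
    linear_gw_per_year = (target_gw - start_gw) / years
    compound_rate = (target_gw / start_gw) ** (1 / years) - 1
    return linear_gw_per_year, compound_rate


gw_per_year, annual_rate = required_growth(start_gw=100, target_gw=400, years=25)
print(f"Constant additions: {gw_per_year:.0f} GW per year")
print(f"Compound growth:    {annual_rate:.1%} per year")
```

That works out to about 12 GW of net new capacity every year, or roughly 5.7% compound annual growth sustained for a quarter of a century.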

Power companies are increasingly aware that, to sign large PPA contracts with big tech firms, the only viable way to scale production quickly is through a combination of renewables and natural gas. This is clear if we look at some of the latest deals in the space:

Renewables

Brookfield and Microsoft partner for 10.5 GW of renewables (May 2024)

Google, Intersect Power to develop co-located energy parks with $20B of renewables + storage (Dec 2024)

Amazon signs agreement to buy 476 MW of renewable power from Iberdrola in Spain (Feb 2025)

AES and Meta Sign Long-Term PPAs to Deliver 650 MW of Solar Capacity in Texas and Kansas (May 2025)

Natural gas

Constellation acquires Calpine’s gas fleet (27 GW) for $16B (Jan 2025)

Vistra acquires 2.6 GW of natural gas generation assets for $2B (May 2025)

There have been two large acquisitions of existing natural gas assets so far this year, but in order to supply the incoming electricity demand increase, it’s clear that new gas-fired power plants will have to be built.

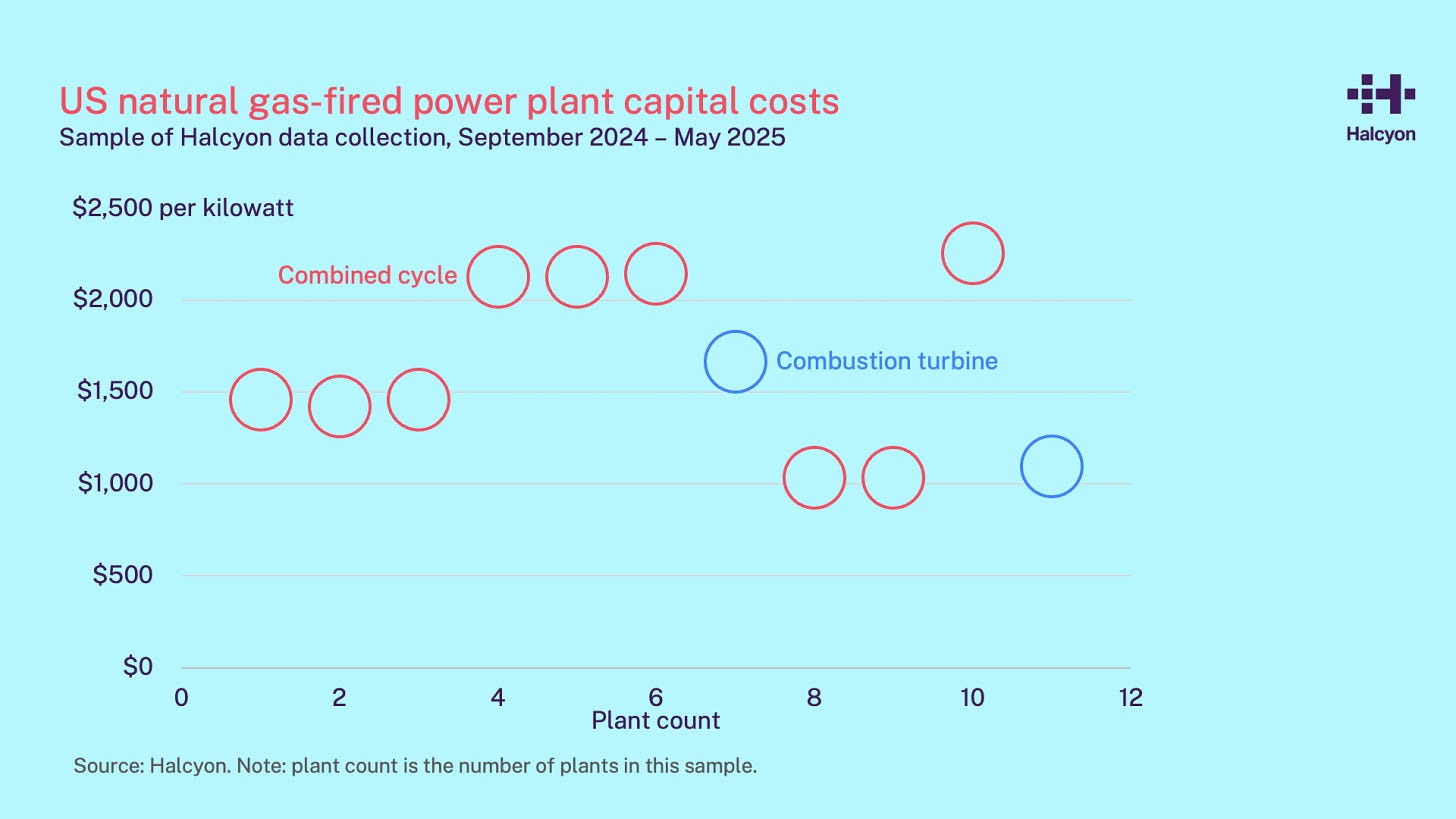

We can expect building new assets to cost more than acquiring existing ones, but how much more? Halcyon, a San Francisco-based company building an AI-assisted search and information platform for the energy sector, just announced its latest product, a US gas power plant tracker. It includes insights like the one below, which I found really interesting.

The acquisition prices outlined above fall between $500/kW and $1,000/kW, so buying existing assets costs between one-fourth and one-half of what it costs to build them from scratch. But buying existing assets can only temporarily meet demand, and with solar's intermittency and the regulatory challenges facing wind, it looks like we’ll see more and more gas-fired power plant buildouts over the next few months and years.
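The per-kW acquisition prices follow directly from the two deals listed earlier. In the sketch below, the deal values and capacities are the headline figures from that list; the new-build cost of $2,000/kW is only the value implied by the "one-fourth to one-half" comparison, used here as an illustrative assumption rather than a sourced number.

```python
# Price per kW for the two gas acquisitions listed above.


def usd_per_kw(price_usd: float, capacity_gw: float) -> float:
    """Headline deal price divided by capacity, in $/kW (1 GW = 1e6 kW)."""
    return price_usd / (capacity_gw * 1e6)


# Headline figures from the list above: (price in USD, capacity in GW)
deals = {
    "Constellation / Calpine": (16e9, 27),
    "Vistra": (2e9, 2.6),
}

# Assumption: illustrative new-build cost implied by the text, not a sourced value.
ASSUMED_NEW_BUILD_USD_PER_KW = 2_000

for name, (price_usd, capacity_gw) in deals.items():
    cost = usd_per_kw(price_usd, capacity_gw)
    ratio = cost / ASSUMED_NEW_BUILD_USD_PER_KW
    print(f"{name}: {cost:.0f} $/kW ({ratio:.0%} of assumed new-build cost)")
```

Both deals land between $500/kW and $1,000/kW, at roughly $590/kW and $770/kW respectively.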

Geopolitical stakes

The interconnection of AI and energy also has implications at the geopolitical level. There is a global race towards achieving superintelligent AI. Both the U.S. and China understand that having ample energy for computing will grant a strategic edge in developing advanced AI, and potentially shift the global balance of power in their favor. OpenAI and other AI giants are using this as leverage to push for faster data center build-out and more investment in grid infrastructure and energy supply. “Eventually the abundance of AI will come down to the abundance of energy, and in terms of long-term strategic investment I can’t see anything more important than energy,” Sam Altman told a Senate committee on May 8 [12].

At the same time, the U.S. has been forging AI-Energy alliances with oil-rich partner countries: a series of deals with Gulf states (Saudi Arabia, UAE, Qatar) aim to channel petrodollars and energy into building AI supercomputing centers aligned with U.S. interests.

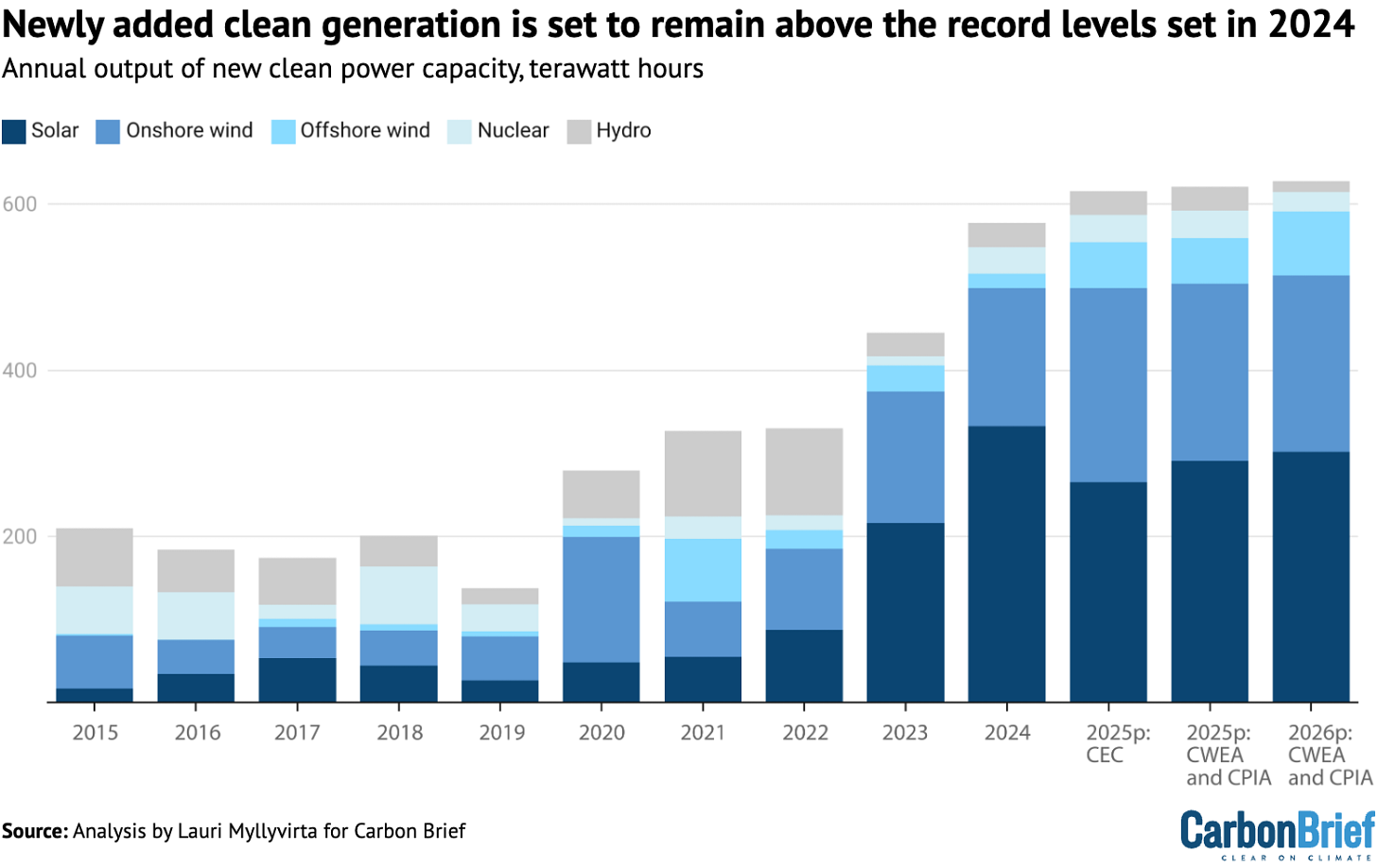

While China seems to be slightly behind on chips, it has a substantial lead on the clean energy generation side. According to a recent Carbon Brief analysis, China is set to produce over 600 TWh of electricity from newly added clean energy sources in 2025. For reference, the total US clean generation capacity, including renewables, hydro and nuclear, is about 500 GW, while China added 375 GW of new capacity in 2024 alone, equal to 75% of that US total.

What I’m thinking

The race for global AI supremacy is on, and this will have profound implications on the energy sector over the next decade. With billions of dollars and geopolitical dominance on the line, companies are already turning to illegal practices to power their clusters [13], rethinking their climate pledges [14], and relaxing their safety policies [15].

It looks like decarbonization and climate are now being sidelined, and I expect this trend to only increase over the next few years. I have said this before and stand by it now: the only way in which we can effectively reduce emissions, in a world where abundant energy means having an edge in a global superpower conflict, is by making clean energy technologies make economic sense. I don’t see a future where we “voluntarily” reduce emissions while the geopolitical stakes are so high.

2. Scientific publications

Enabling flexible consumption in schools with Model Predictive Control

Field implementation of model-based predictive control in an all-electric school building: Impact of occupancy on energy flexibility: This study presents a field implementation of a grid-interactive model-based predictive control (MPC) system in an elementary school in Montreal, Canada. Researchers compared eight classrooms during cold winter days: four using a standard PI controller and four using the MPC.

During morning peak hours, when electricity is most expensive, the classrooms controlled by the MPC reduced their average power consumption by 61% compared to the classrooms running on a standard controller.

What I’m thinking

Schools are generally a great place to implement smart control algorithms, due to their very predictable occupancy schedules. At Ento, they have also been one of the main targets for our control product.

Besides the standard heating output optimization based on weather and occupancy, I found it interesting that the authors were able to take into account the implicit demand response signal embedded in the utility’s electricity rates. With electricity rates becoming increasingly volatile due to renewable penetration, buildings that can intelligently adjust their consumption based on those signals are extremely valuable, and this capability should eventually become standard outside of research projects.
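To make the idea of reacting to price signals more concrete, here is a toy sketch of the planning step of a price-aware predictive controller. It is not the controller from the paper: the first-order room model, all its parameters, the comfort band and the tariff are made-up assumptions, and the optimization is a plain brute-force search over a short horizon.

```python
from itertools import product

# Toy first-order room model (all parameters are made-up assumptions):
# T_next = T + LOSS * (T_OUT - T) + GAIN * u, with heater level u in [0, 1].
LOSS, GAIN, T_OUT = 0.1, 4.0, -10.0
T_MIN, T_MAX = 20.0, 24.0   # comfort band in degrees Celsius
LEVELS = (0.0, 0.5, 1.0)    # allowed heater levels for each hourly step


def simulate(t0, schedule):
    """Return the room temperature after each step of the heater schedule."""
    temps, t = [], t0
    for u in schedule:
        t = t + LOSS * (T_OUT - t) + GAIN * u
        temps.append(t)
    return temps


def plan(t0, prices):
    """Brute-force the cheapest schedule that stays inside the comfort band."""
    best = None
    for schedule in product(LEVELS, repeat=len(prices)):
        temps = simulate(t0, schedule)
        if not all(T_MIN <= t <= T_MAX for t in temps):
            continue  # comfort constraint violated, discard this schedule
        cost = sum(p * u for p, u in zip(prices, schedule))
        if best is None or cost < best[0]:
            best = (cost, schedule, temps)
    return best


prices = [1, 1, 1, 5, 5, 1]  # hypothetical tariff with a two-hour morning peak
cost, schedule, temps = plan(21.0, prices)
print("heater schedule:", schedule)
print("temperatures:   ", [round(t, 1) for t in temps])
print("energy cost:    ", cost)
```

With this tariff, the cheapest feasible plan tends to heat the room before the peak and coast through part of it, which is the pre-heating behavior that lets a building shift load away from expensive hours. A real MPC would re-solve this problem at every step with updated measurements and forecasts, and apply only the first action of each plan.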

New techniques to slash the computational cost of LLM reasoning

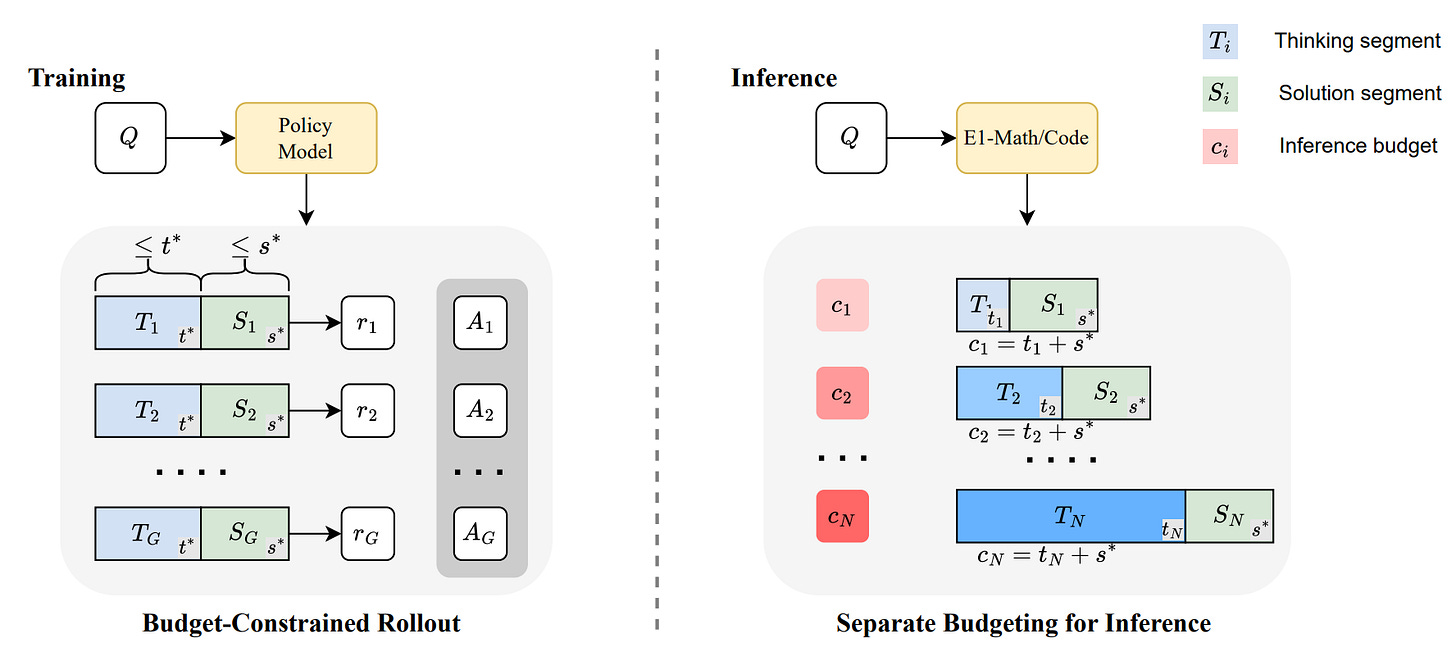

Two papers from researchers at Salesforce explore a pragmatic solution to the high computational cost of complex reasoning. While Chain-of-Thought (CoT) prompting significantly improves performance on difficult tasks, the "thinking" steps make it slow and expensive. These studies show that you don't always need the full reasoning path to get the right answer.

The first paper, Fractured Chain-of-Thought Reasoning, introduces an inference-time strategy called Fractured Sampling. It breaks down reasoning into three controllable dimensions: the number of reasoning paths, the number of final answers per path, and the depth at which the reasoning is "fractured," or truncated. Through experiments on complex math and logic benchmarks, the authors demonstrate that exploring multiple partial reasoning paths is far more cost-effective than generating a few complete ones.
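The three dimensions described above can be sketched in a few lines of Python. This is not the authors' implementation: `think` and `answer` are hypothetical stand-ins for calls to a language model, and aggregating the answers by majority vote is my own assumption about how the samples might be combined.

```python
from collections import Counter
from typing import Callable, List


def fractured_sampling(
    prompt: str,
    think: Callable[[str, int, int], List[str]],   # hypothetical: (prompt, seed, max_steps) -> reasoning steps
    answer: Callable[[str, List[str], int], str],  # hypothetical: (prompt, steps, seed) -> final answer
    n_paths: int,
    answers_per_path: int,
    depth: int,
) -> str:
    """Sample several truncated reasoning paths and vote over their answers."""
    votes: Counter = Counter()
    for path_seed in range(n_paths):
        # Stop each reasoning path after `depth` steps instead of completing it.
        partial_steps = think(prompt, path_seed, depth)
        for answer_seed in range(answers_per_path):
            votes[answer(prompt, partial_steps, answer_seed)] += 1
    # Assumption: combine the samples with a simple majority vote.
    return votes.most_common(1)[0][0]


# Deterministic stubs standing in for a real model, for illustration only.
def toy_think(prompt: str, seed: int, max_steps: int) -> List[str]:
    return [f"path {seed}, step {i}" for i in range(max_steps)]


def toy_answer(prompt: str, steps: List[str], seed: int) -> str:
    # The toy "model" is reliable once it has seen at least two reasoning steps.
    return "42" if len(steps) >= 2 or seed % 2 == 0 else "41"


result = fractured_sampling("toy question", toy_think, toy_answer,
                            n_paths=3, answers_per_path=2, depth=2)
print(result)
```

The compute budget is roughly proportional to `n_paths * (depth + answers_per_path)` generation calls, so trading depth for more paths or more answers per path is what makes the three knobs interesting.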

The second paper, Scalable Chain of Thoughts via Elastic Reasoning, proposes a training methodology to make models robust to this truncation. Elastic Reasoning explicitly separates the model's output into a "thinking" phase and a "solution" phase, each with its own token budget. Using reinforcement learning, they train the model to generate high-quality solutions even from incomplete thoughts.

What I’m thinking

Most of the energy consumption of LLMs comes from the inference phase, and CoT models have radically increased those costs. Any technique that can reduce computation while keeping quality high should be explored. If we consider the scale at which LLMs are going to be used, such radical efficiency gains on every request would be more impactful than any power generation project that can be implemented. Coming from the building energy efficiency field, I see a very clear analogy: clean energy generation is important, but anything that can reduce energy use by increasing efficiency should be prioritized.

Code is available on GitHub and Hugging Face if you want to try it out.

3. Reimagine Energy publications

No full-length article this last month, but you can check out this LinkedIn post. It provides a good overview of the Python tutorials I have released so far for solar production analysis.

4. AI in Energy job board

This space is dedicated to job posts in the sector that caught my attention during the last month. I have no affiliation with any of them; I’m just looking to help readers connect with relevant jobs in the market.

Staff Machine Learning Engineer at Sunrun

Data Engineer - Platform at Flower

Cloud Engineer (GCP) at GridEdge

Summer Internship - AI-Powered Climate Tech at TetraxAI

Postdoc position in energy & AI at KU Leuven

Postdoctoral Research Fellow (Multi-Scale Energy Systems) at NUS

Conclusion

With so much going on in the sector, it’s not easy to follow everything. If you’re aware of anything that seems relevant and should be included in Currents (job posts, scientific articles, relevant industry events, etc.), please reply to this email or reach out on LinkedIn and I’ll be happy to consider them for inclusion!

[1] https://x.com/OpenAINewsroom/status/1864373399218475440

[2] https://en.wikipedia.org/wiki/Electricity_sector_in_Iceland

[3] https://www.aa.com.tr/en/world/google-data-centers-used-nearly-6b-gallons-of-water-in-2024/3478721

[4] https://www.worldometers.info/water/

[5] https://www.bloomberg.com/graphics/2025-ai-impacts-data-centers-water-data/

[6] https://www.nytimes.com/2025/05/22/technology/openai-uae-data-centers.html

[7] https://www.reimagine-energy.ai/i/158224512/the-twh-problem

[8] https://www.mckinsey.com/industries/technology-media-and-telecommunications/our-insights/the-cost-of-compute-a-7-trillion-dollar-race-to-scale-data-centers#

[9] https://www.nytimes.com/2025/05/09/climate/trump-draft-nuclear-executive-orders.html

[10] https://www.whitehouse.gov/presidential-actions/2025/05/reinvigorating-the-nuclear-industrial-base/

[11] https://apnews.com/article/google-elementl-nuclear-power-artificial-intelligence-2bd4282af728e16446bdcefe97d37873

[12] https://www.politico.com/newsletters/power-switch/2025/05/09/ai-giants-bring-their-energy-pleas-to-congress-00339012

[13] https://www.theguardian.com/us-news/2025/apr/09/elon-musk-xai-memphis

[14] https://www.wsj.com/articles/microsoft-feels-the-heat-as-carbon-negative-goal-looms-nearer-cbf20c2f?mod=energy-oil_news_article_pos4

[15] https://fortune.com/2025/04/16/openai-safety-framework-manipulation-deception-critical-risk/

Great insights!

I don't know if you saw Sam Altman commenting on the energy and water use of OpenAI models directly in his blog post:

https://blog.samaltman.com/the-gentle-singularity

TLDR 0.34 watt-hours electricity / query + 0.000085 gallons of water (1/15 of a teaspoon)

Great insights as usual! Very cool solution for schools https://www.ento.ai/product/ento-control?utm_source=substack&utm_medium=email.